The Prompt Report: A Comprehensive Overview of Prompting Techniques

How many ways are there to prompt?!

Garbage in, garbage out! When working with LLMs, influencing what you can is essential. In other words: better prompts make a big difference.

Thanks to Sander Schulhoff et al. (the paper is available on arXiv), there is now a proper survey of the current state of prompting techniques (as of June 2024).

First, what you won't find

The paper does not pinpoint a single "best" prompting technique. Instead, it emphasizes that the effectiveness of a technique can vary significantly depending on the specific application and context. This nuanced understanding is critical for developers and end users, yes it is!

We are still in the early days: there is no proper shared vocabulary, and a lot of things are simply not defined. So a paper with a detailed vocabulary of 33 terms helps improve ontological clarity and supports the precise application of these techniques.

A Structured Taxonomy of Prompting Techniques

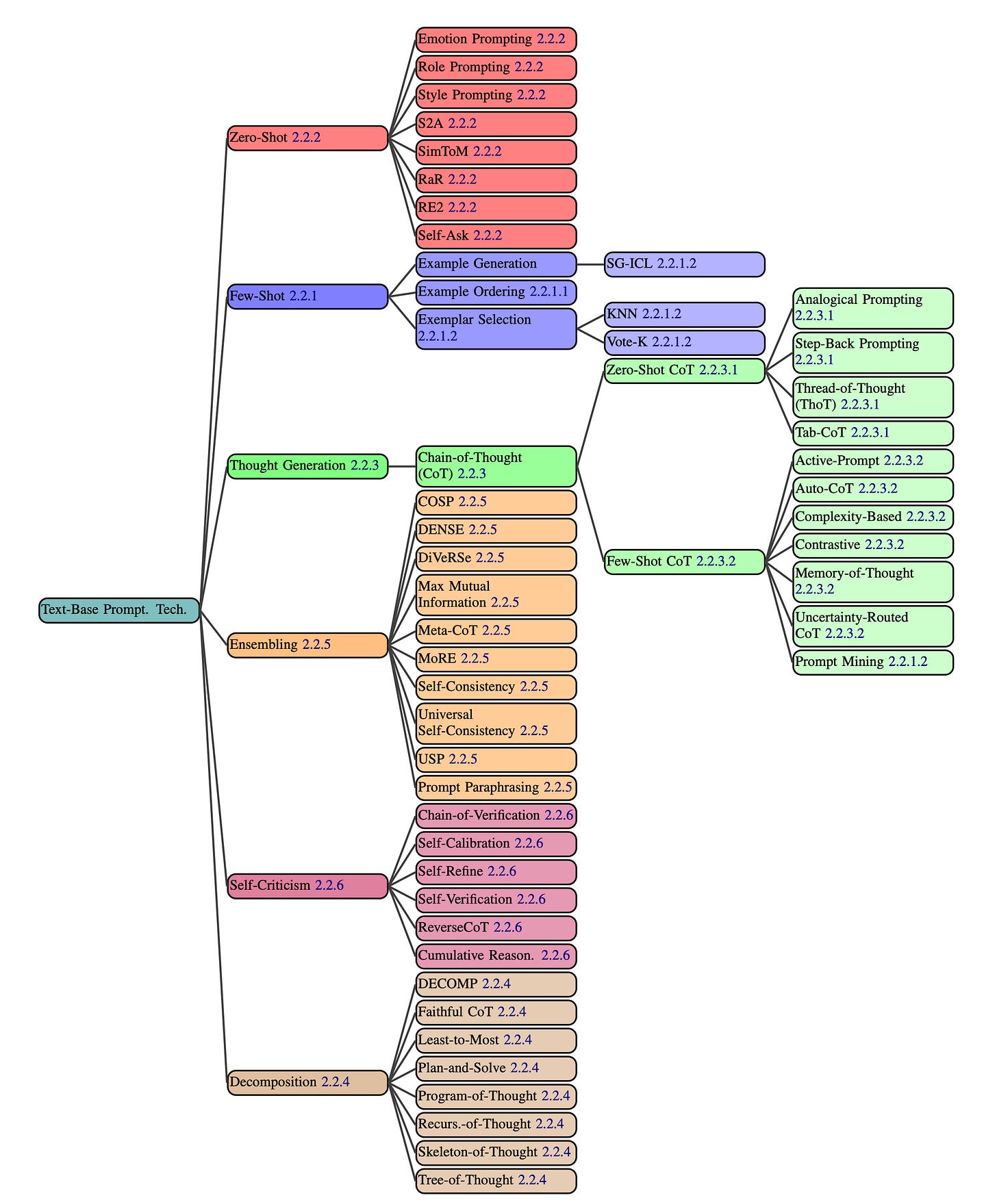

One of the primary contributions of this paper is the establishment of a structured taxonomy for prompting techniques. The authors have identified and categorized 58 text-only prompting techniques along with 40 techniques for other modalities. This comprehensive classification helps in navigating the often-confusing landscape of prompting methods, addressing the conflicting terminology that has emerged due to the rapid development of this field. Or, in my blunt words: we have word chaos… and as a mathematician, that is not something you want! Precision in language matters.

Figure: All text-based prompting techniques from our dataset (Schulhoff et al.)

A proper Meta-Analysis

Who does not love a good meta-analysis?!

A meta-analysis is always a great reference point for future research, and it gives you a solid basis for selecting the most appropriate methods for your specific requirements.

PRISMA Review Process: The paper follows a meticulous PRISMA review process, analyzing 4,797 records and identifying 1,565 relevant papers.

2 Case Studies and some Prompt Engineering Advice

If it's not your first time here or you follow me on LinkedIn, this will not surprise you:

Language models are highly sensitive to details such as prompt duplication and the inclusion of names.

Few-shot prompting (either overfit or go bigger), combined with chain-of-thought methods (step by step), typically yields the best results.
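To make the few-shot plus chain-of-thought combination concrete, here is a minimal sketch of how such a prompt could be assembled. The `Exemplar` class, the `build_few_shot_cot_prompt` helper, the `Q:`/`A:` layout and the toy exemplars are my own assumptions for illustration, not the paper's exact template.

```python
from dataclasses import dataclass


@dataclass
class Exemplar:
    """One worked example shown to the model (hypothetical structure)."""
    question: str
    reasoning: str  # the step-by-step rationale
    answer: str


def build_few_shot_cot_prompt(exemplars: list[Exemplar], question: str) -> str:
    """Assemble worked examples, then the new question with an open reasoning cue."""
    blocks = []
    for ex in exemplars:
        blocks.append(
            f"Q: {ex.question}\n"
            f"A: Let's think step by step. {ex.reasoning} "
            f"The answer is {ex.answer}."
        )
    # The last block ends on the same cue, left open for the model to continue.
    blocks.append(f"Q: {question}\nA: Let's think step by step.")
    return "\n\n".join(blocks)


# Made-up exemplars, purely for illustration.
EXEMPLARS = [
    Exemplar("What is 12 + 30?", "12 plus 30 is 42.", "42"),
    Exemplar("What is 7 * 6?", "7 times 6 is 42.", "42"),
]

prompt = build_few_shot_cot_prompt(EXEMPLARS, "What is 15 + 4?")
print(prompt)
```

The exemplars do double duty: they show the model the task and the reasoning style you expect back, which is why their wording and order are worth testing.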

Prompt Engineering: Creating specific prompts to get accurate responses from AI. Even small changes in wording or formatting can significantly affect outcomes.

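Answer Engineering: Designing how the final answer is pulled out of the model's output (what shape it has, which values are allowed, and how it is extracted), so that responses are consistently formatted, usable and relevant.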
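A small sketch of the answer-engineering side, assuming a multiple-choice task where the prompt asked the model to finish with something like "Answer: C". The answer space, the regular expression and the `extract_answer` name are assumptions for this example. A real setup would need an extractor tuned to its own output format.

```python
import re
from typing import Optional

# Assumed answer space for this sketch: four multiple-choice options.
ANSWER_SPACE = {"A", "B", "C", "D"}

# Assumed convention: the model states "Answer: X" or "the answer is (X)".
_ANSWER_RE = re.compile(r"answer\s*(?:is|:)\s*\(?([A-D])\b", re.IGNORECASE)


def extract_answer(response: str) -> Optional[str]:
    """Return the last stated option letter, or None if nothing parseable is found."""
    matches = _ANSWER_RE.findall(response)
    if not matches:
        return None
    # Prefer the final statement: the model may revise itself while reasoning.
    letter = matches[-1].upper()
    return letter if letter in ANSWER_SPACE else None


print(extract_answer("I first thought the answer is (a), but on reflection. Answer: C"))
print(extract_answer("I am not sure."))
```

Returning `None` instead of guessing keeps unparseable responses visible, so you can count them rather than silently scoring them as wrong or right.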

The manual prompting process is challenging, but combining human expertise with automated optimization offers great potential.

Benchmarking Prompting Techniques: This case study benchmarks six prompting techniques on MMLU (Massive Multitask Language Understanding) to see how different prompt formats affect performance. Results show that the choice of prompt format noticeably changes accuracy. Notably, the paper also reports that duplicating parts of a prompt or including personal names can significantly change accuracy, so even minor details in a prompt can matter.
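If you want to run this kind of comparison on your own prompts, a tiny harness is enough to get started. The sketch below is an assumption-heavy toy: the two questions are made up (not MMLU data), the two formats are my own, and `fake_model` is a stand-in that always answers "A", so the printed numbers mean nothing until you plug in a real model call.

```python
from typing import Callable

# Tiny made-up dataset: (question, options, correct letter). Not MMLU data.
QUESTIONS = [
    ("Which planet is closest to the Sun?", ["Venus", "Mercury", "Mars", "Earth"], "B"),
    ("What is 2 + 2?", ["4", "3", "5", "22"], "A"),
]


def render_options(question: str, options: list[str]) -> str:
    """Lay out the question followed by lettered options."""
    lines = [question] + [f"{letter}. {opt}" for letter, opt in zip("ABCD", options)]
    return "\n".join(lines)


# Two hypothetical prompt formats to compare.
FORMATS: dict[str, Callable[[str, list[str]], str]] = {
    "zero_shot": lambda q, o: render_options(q, o) + "\nAnswer:",
    "zero_shot_cot": lambda q, o: render_options(q, o) + "\nLet's think step by step.",
}


def accuracy(model: Callable[[str], str], fmt: Callable[[str, list[str]], str]) -> float:
    """Fraction of questions where the model's reply starts with the correct letter."""
    correct = sum(
        model(fmt(q, opts)).strip().upper().startswith(gold)
        for q, opts, gold in QUESTIONS
    )
    return correct / len(QUESTIONS)


def fake_model(prompt: str) -> str:
    """Stand-in for a real LLM call; always answers 'A'."""
    return "A"


results = {name: accuracy(fake_model, fmt) for name, fmt in FORMATS.items()}
print(results)
```

Keeping the model behind a plain `Callable[[str], str]` means the same harness works with any client you wrap, and adding a third format is one more dictionary entry.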

Suicide Crisis Syndrome (SCS) Application: This case study applies prompting techniques to label Reddit posts related to SCS. It demonstrates how prompt engineering can help identify posts about suicide crises, showcasing its practical value in real-world scenarios.

Give it a read and, much more importantly, use your knowledge!

And for the German-speaking crowd: there are new dates for the Entry-level Workshop and the Real advanced stuff. English? Just ping me!

And as always - send me your problem, happy to help!